
Customer

For our client, one of the five largest banks in Europe, we built a model to predict customer churn.

Challenge

When customers leave, banks lose potential revenue. Moreover, acquiring new customers costs more than retaining existing ones.

Until then, the bank had relied on its own model, which predicted customer departures rather than directly helping to reduce them. Because of how the model was structured, signals of abandonment were always caught too late for the bank to act on them. As a result, the model was not aligned with the bank's business goals.

Solution

Analysis of business needs and existing model

During a workshop with the client, we analyzed their business needs and the shortcomings of the existing model. We concluded that if a customer's intention to leave were detected only when, for example, their salary stopped arriving in their account, it would already be too late to retain them. Yet this was one of the premises on which the existing model was based.

The key point is that the actual purpose of the model is to reduce the churn rate. Predicting departures is only a tool, and it must flag a customer soon enough after they decide to leave for the bank to still have a chance of retaining them.

Improving the existing banking model

To assess the likelihood of a customer leaving, we decided to use more subtle signals, such as a change in an account holder's activity, for example a drop in the number of outgoing transfers.
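A signal like this can be sketched as a simple feature: compare a customer's average number of outgoing transfers in the most recent months with their average over a preceding baseline window. This is a minimal illustration under stated assumptions, not the model used in the project; the window lengths, the 0.5 threshold, and the function names `transfer_drop_ratio` and `flag_early_churn_signal` are hypothetical.

```python
from __future__ import annotations

from statistics import mean


def transfer_drop_ratio(
    monthly_outgoing: list[int], recent_months: int = 2, baseline_months: int = 6
) -> float | None:
    """Ratio of recent average outgoing transfers to the preceding baseline average.

    monthly_outgoing holds one count per month, oldest first (hypothetical layout).
    Values well below 1.0 suggest the customer is moving activity elsewhere.
    Returns None when there is not enough history or the baseline average is zero.
    """
    if len(monthly_outgoing) < recent_months + baseline_months:
        return None
    recent = monthly_outgoing[-recent_months:]
    baseline = monthly_outgoing[-(recent_months + baseline_months):-recent_months]
    baseline_avg = mean(baseline)
    if baseline_avg == 0:
        return None
    return mean(recent) / baseline_avg


def flag_early_churn_signal(monthly_outgoing: list[int], threshold: float = 0.5) -> bool:
    """Flag a customer whose recent transfer activity fell below threshold x baseline."""
    ratio = transfer_drop_ratio(monthly_outgoing)
    return ratio is not None and ratio < threshold


# Usage: six stable months followed by two much quieter ones.
history = [20, 22, 19, 21, 20, 18, 8, 6]
print(transfer_drop_ratio(history), flag_early_churn_signal(history))
```

In a real model such a ratio would more likely be one input feature among many than a hard rule; the threshold here only makes the idea concrete.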

These signals appeared early enough for the bank to act and reduce churn. We configured internal on-premises infrastructure and developed the code needed to run the model-building process on relatively large datasets.

The model was built in the traditional way: using monthly aggregated data describing the behavior of several million customers.
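Monthly aggregation of this kind can be illustrated with a short sketch that turns raw transactions into per-customer, per-month counts and sums. The record layout `(customer_id, booking_date, amount)`, the convention that negative amounts are outgoing, and the function name `aggregate_monthly` are assumptions made for illustration. At the scale of several million customers this step would normally run in a database or distributed engine rather than in pure Python.

```python
from collections import defaultdict
from datetime import date


def aggregate_monthly(transactions):
    """Aggregate raw transactions into per-customer, per-month features.

    Each transaction is a hypothetical (customer_id, booking_date, amount) tuple;
    a negative amount is treated as outgoing, zero or positive as incoming.
    Returns a dict keyed by (customer_id, year, month).
    """
    agg = defaultdict(
        lambda: {"n_outgoing": 0, "n_incoming": 0, "sum_outgoing": 0.0, "sum_incoming": 0.0}
    )
    for customer_id, booking_date, amount in transactions:
        row = agg[(customer_id, booking_date.year, booking_date.month)]
        if amount < 0:
            row["n_outgoing"] += 1
            row["sum_outgoing"] += -amount
        else:
            row["n_incoming"] += 1
            row["sum_incoming"] += amount
    return dict(agg)


# Usage with a few made-up transactions.
tx = [
    ("c1", date(2023, 1, 5), -100.0),
    ("c1", date(2023, 1, 20), 2500.0),
    ("c1", date(2023, 2, 3), -40.0),
    ("c2", date(2023, 1, 9), -10.0),
]
for key, features in sorted(aggregate_monthly(tx).items()):
    print(key, features)
```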

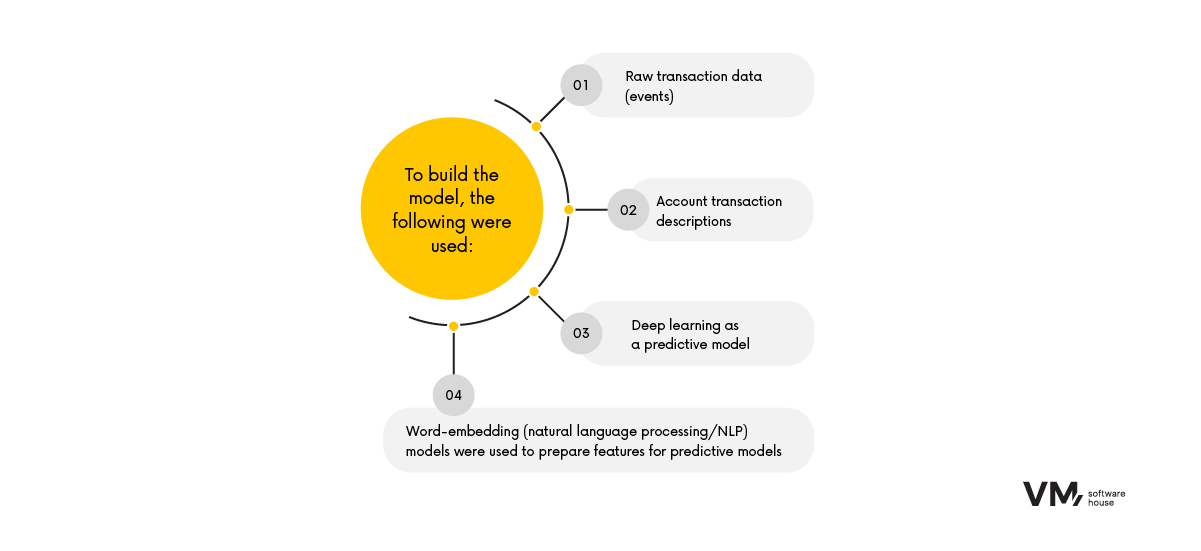

We also ran additional experiments with non-standard approaches and non-aggregated, atomic data. Among other things, we used:

- Raw transaction data (events)

- Account transaction descriptions

- Deep learning as a predictive model

- Word-embedding (natural language processing, NLP) models to prepare features for the predictive models. These models convert textual data into a numerical form that machine learning algorithms can process, and they can also capture the contextual meaning of words, their similarity, and their relationships to other words.
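One common way to turn word embeddings into features for a predictive model is to average the vectors of the words in each transaction description. The sketch below assumes that technique, since the case study does not say how the embeddings were combined. The tiny `EMBEDDINGS` table is hypothetical and stands in for vectors from a trained embedding model; `description_vector` and `cosine` are illustrative names.

```python
import math
import re

# Hypothetical toy word vectors; in practice these would come from an embedding
# model trained on transaction descriptions.
EMBEDDINGS = {
    "transfer": [0.9, 0.1, 0.0],
    "savings": [0.7, 0.6, 0.1],
    "broker": [0.2, 0.8, 0.5],
    "rent": [0.1, 0.0, 0.9],
}
DIM = 3


def tokenize(text):
    """Lowercase the description and keep alphabetic tokens only."""
    return re.findall(r"[a-z]+", text.lower())


def description_vector(text):
    """Average the vectors of known words; return a zero vector if none are known."""
    vectors = [EMBEDDINGS[t] for t in tokenize(text) if t in EMBEDDINGS]
    if not vectors:
        return [0.0] * DIM
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(DIM)]


def cosine(a, b):
    """Cosine similarity between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Usage: the numeric vector becomes an input feature for the churn model.
print(description_vector("Transfer to SAVINGS account"))
print(cosine(EMBEDDINGS["transfer"], EMBEDDINGS["savings"]))
```

Averaging discards word order, which keeps the features compact; sequence models such as the deep learning approach mentioned above could instead consume the word vectors directly.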

Results

- Reducing the churn rate by 6% of its previous value (a relative reduction).

- Familiarizing the bank’s data science team with new modeling approaches that can be applied to other problems (know-how transfer).

- Reusing the computing infrastructure we set up to solve other modeling problems.
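Because the 6% figure is relative to the previous churn rate, the absolute change is smaller. With a purely hypothetical baseline churn rate of 10% (the case study does not report the actual value):

$$
r_{\text{after}} = r_{\text{before}}\,(1 - 0.06) = 10\% \times 0.94 = 9.4\%
$$

That is, a drop of 0.6 percentage points in this illustrative case.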
