Every CTO (Chief Technology Officer) knows that software should be built to scale easily. Designing for scalability during development lets you make full use of available resources and maintain high performance. But how can you estimate the cost of scaling so that it achieves its goal without draining the organization’s budget?

As IT budgets tighten and companies face pressure to cut costs, maintaining and managing scaled applications is becoming a real challenge. According to Pluralsight, the cost of systems administration, monitoring, backup, and upgrades can account for as much as 20-30% of the total IT budget.

Does this mean you have to implement expensive scaling strategies right away? Not at all; many experienced leaders have found that scaling applications cost-effectively is the key to success.


What is Application Scaling?

Scalability is an application’s ability to handle increasing load, users, data, or other demands without a significant drop in performance or reliability. In practice, that load depends on the amount of data or the number of operations that must be processed at any given time, and a scaling strategy is how you prepare the infrastructure running the application to handle it.

Therefore, when building an application, you need to design it to support a larger number of users while maintaining consistent performance. This will save money in the long run by reducing the need for major changes and upgrades.

This requires scalable technologies and infrastructure, such as cloud-based solutions or a microservices architecture, so that the application can grow over time.

So, let’s move on to six factors that affect successful system scaling.

1. Know Your System’s Needs

The first step in estimating and controlling cloud application costs is understanding your scaling needs. Start by asking questions such as these:

- What are the project’s goals? What is the application’s type, size, and complexity? What response time is acceptable? How much will I spend to cut the response time in half? Is the application designed in such a way that this is even possible?

- Do all parts of my system and all functions need to be equally efficient, or does only part of the functionality need higher performance? If certain parts do, identify them early, at this stage. Doing so saves costs in the implementation phase.

- Do I know my biggest cost drivers? Not just servers but also data and usage patterns.

- Do we have defined scaling metrics, such as CPU usage, memory usage, response time, or throughput?
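To put rough numbers on these questions, a back-of-the-envelope estimate can turn a traffic forecast and a per-instance capacity (measured in your own load tests) into a monthly compute cost. The Python sketch below assumes a fixed 30% headroom and a flat hourly instance price; the function names, the 730-hour month, and all figures in it are hypothetical, and it ignores storage, data transfer, and licensing, which can be major cost drivers on their own.

```python
import math

# Assumption: 730 hours approximates an average month of continuous running.
HOURS_PER_MONTH = 730


def instances_needed(peak_rps: float, rps_per_instance: float, headroom: float = 0.3) -> int:
    """Instances required to serve peak traffic plus a safety margin (headroom)."""
    if rps_per_instance <= 0:
        raise ValueError("rps_per_instance must be positive")
    return max(1, math.ceil(peak_rps * (1 + headroom) / rps_per_instance))


def monthly_compute_cost(peak_rps: float, rps_per_instance: float,
                         hourly_price: float, headroom: float = 0.3) -> float:
    """Rough monthly cost if a fleet sized for peak traffic runs all month."""
    fleet = instances_needed(peak_rps, rps_per_instance, headroom)
    return fleet * hourly_price * HOURS_PER_MONTH


# Hypothetical figures: 1,000 req/s at peak, 150 req/s per instance, $0.10 per instance-hour.
print(instances_needed(1000, 150))            # 9
print(monthly_compute_cost(1000, 150, 0.10))  # about 657 per month
```

Running the same functions for several traffic scenarios (for example, today’s peak versus double that peak, which here needs 18 instances instead of 9) shows how cost grows with load before you commit to any architecture.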

This first stage of analysis is crucial. Many CTOs warn that “pre-scaling” can lead you to overspend on MVP research and development without achieving the goal.

This happens when we invest in scaling capabilities we don’t need, or invest too early, before the product generates actual revenue.

On the other hand, anticipating traffic growth is necessary, because a lack of planning may drive away dissatisfied users.

2. Find Application Bottlenecks

Before we build scaling strategies, we need to identify the points that may limit application performance. There are three fundamental bottlenecks for any website:

- Not enough processor (CPU) power to handle the number of users,

- Not enough memory to hold and process the data those operations need,

- Not enough input/output (I/O) capacity to send data to users fast enough.

If you increase only one of these three resources, you will eventually run out of one of the other two as traffic grows. To overcome these limits, you can use vertical or horizontal scaling.

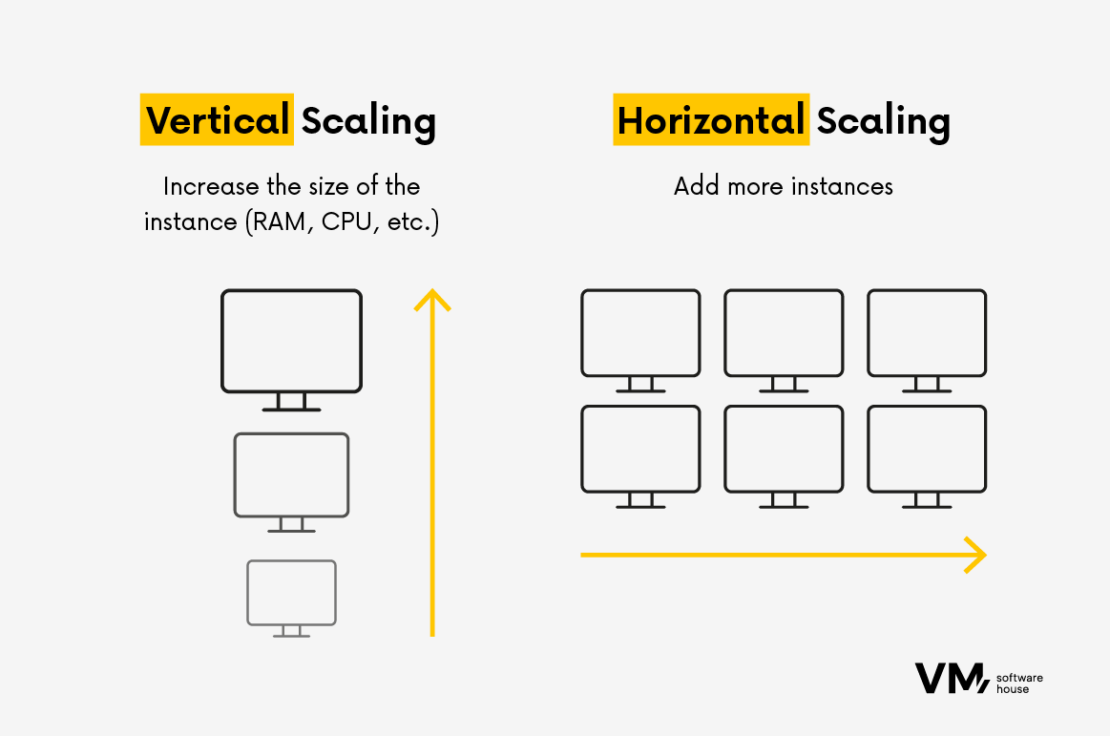

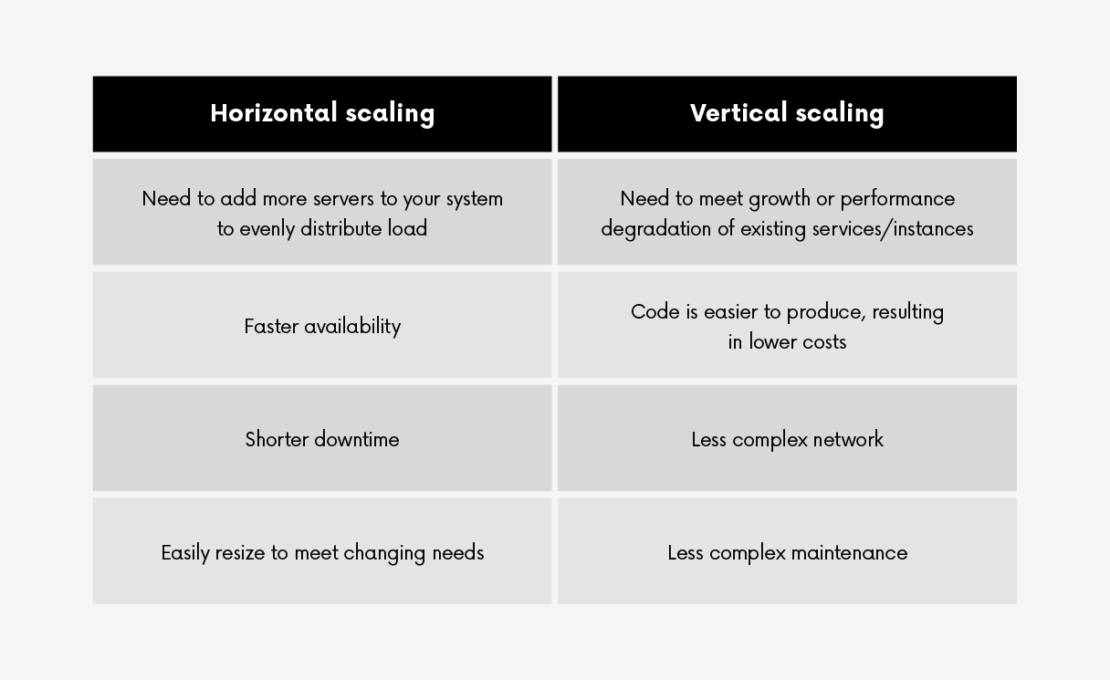

Horizontal Scaling vs. Vertical Scaling

Vertical scaling, also known as scaling up (or down), means changing the capacity of an existing server, typically by adding more CPU, memory, or faster storage. It is a quick solution, but it has its limits.

As with desktop computers, adding a faster processor, more memory, or an SSD makes a server faster. Increasing CPU, memory, and I/O capacity will generally increase the number of users you can support. At some point, however, a problem arises: it becomes increasingly difficult and expensive to build a larger server.

The alternative, having engineers find and fix performance problems in the code, is generally very time-consuming, and it means they spend less time adding new features or doing other things that might attract new users.

Therefore, most systems rely on horizontal scaling (scaling out), which means adding more servers. This approach is more flexible and cost-effective, but it requires a careful architecture to manage the added complexity.

Services that automate scaling most often use horizontal scaling. Cloud services such as Heroku and AWS Auto Scaling dynamically add servers as traffic increases and remove them as it subsides.

The choice between horizontal and vertical scaling depends on the complexity of the application: vertical scaling suits simpler, less integrated applications, while horizontal scaling is better for complex applications with multiple microservices or interconnected systems.

3. Choose the Right Scaling Strategy

Application scaling should be flexible enough to automatically scale up and down depending on the workload, ensuring high application availability while reducing costs.

Now, we will discuss some scaling techniques to consider when optimizing your application.

1. Load Balancing

Load balancing distributes incoming traffic across multiple servers so that no single server is overloaded. This improves resource utilization, maximizes throughput, and minimizes response time. Popular strategies include:

- Round Robin: requests are sent to each server in turn.

- Least Connections: each request goes to the server with the fewest active connections.

- IP Hash: requests from the same IP address always go to the same server, ensuring session persistence.
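The three strategies can be sketched in a few lines of Python. This is a minimal, in-process illustration under simplifying assumptions (a static server list, no health checks); the class names are hypothetical, and in production these policies are normally configured in a load balancer such as NGINX, HAProxy, or a cloud load balancer rather than written by hand.

```python
import hashlib
import itertools


class RoundRobinBalancer:
    """Hands out servers in a fixed rotating order."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def pick(self):
        return next(self._cycle)


class LeastConnectionsBalancer:
    """Routes each request to the server with the fewest active connections."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def acquire(self):
        # min() returns the first server on ties, so list order breaks ties.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when the request finishes so the connection count stays accurate.
        self.active[server] -= 1


class IPHashBalancer:
    """Maps a client IP to a stable server so its session stays on one machine."""

    def __init__(self, servers):
        self.servers = list(servers)

    def pick(self, client_ip):
        # Stable digest: Python's built-in hash() of a str changes between processes.
        digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
        return self.servers[int.from_bytes(digest[:8], "big") % len(self.servers)]
```

Note that simple modulo-based IP hashing reassigns many clients to different servers whenever a server is added or removed, which breaks their sessions; consistent hashing is a common way to limit that disruption.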

2. Database Sharding

Database sharding splits a single data set across multiple databases (shards), with each shard holding a subset of the records, typically chosen by a shard key such as a user ID. Because both read and write operations are spread across the shards, the load on each individual database is reduced.
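A common way to route queries is hash-based sharding: hash the shard key (here, a user ID) and use the result to choose a shard. The Python sketch below assumes four shards with hypothetical names; `shard_for` and `group_by_shard` are illustrative helpers, and a real router would map each shard name to its own database connection.

```python
import hashlib

# Hypothetical shard names; each would correspond to a separate database.
SHARDS = ["users_shard_0", "users_shard_1", "users_shard_2", "users_shard_3"]


def shard_for(shard_key: str, shards=SHARDS) -> str:
    """Pick a shard by hashing the shard key (e.g. a user ID)."""
    digest = hashlib.sha256(shard_key.encode("utf-8")).digest()
    return shards[int.from_bytes(digest[:8], "big") % len(shards)]


def group_by_shard(keys, shards=SHARDS):
    """Split a batch of keys so each shard only receives the records it owns."""
    groups = {shard: [] for shard in shards}
    for key in keys:
        groups[shard_for(key, shards)].append(key)
    return groups


print(shard_for("user-42"))  # the same key always maps to the same shard
```

Because the shard is derived from `len(shards)`, adding a shard moves most keys to a different shard, so growing the cluster requires a data migration. Consistent hashing or a lookup directory of key ranges are common alternatives when you expect to add shards often.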

3. Introducing Caching

Caching stores copies of frequently used data in high-speed storage for quick retrieval. Common types of caching include:

- Data (query) caching: storing the results of frequent database queries to reduce redundant data operations.

- Page caching: storing entire rendered HTML pages to avoid repetitive rendering.
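Data caching is often implemented with the cache-aside pattern: check the cache first, and only on a miss run the expensive query and store its result with an expiry time. The minimal in-memory sketch below assumes a single process; `TTLCache` and `get_or_load` are hypothetical names, and a shared cache such as Redis or Memcached would normally take this role when several servers must see the same cached data.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Cache-aside helper: serve a fresh entry from memory, otherwise load and store it."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without waiting
        self._store: Dict[Any, Tuple[float, Any]] = {}  # key -> (expires_at, value)

    def get_or_load(self, key: Any, loader: Callable[[], Any]) -> Any:
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: the expensive operation is skipped
        value = loader()     # miss or expired: run the real query or rendering
        self._store[key] = (now + self.ttl, value)
        return value
```

The TTL is the main design decision: a longer TTL saves more database work but serves staler data.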

4. Microservices Architecture

Microservices divide an application into independent components, each focused on a specific function. In a microservices architecture, scaling means running several instances of the same service and distributing traffic among them. Because these services handle high traffic, they need to be reliable, always available, and responsive, so that they do not degrade overall performance.

Examples of microservices found in many popular applications include:

- Search engines

- Document generation

- Authorization and authentication

The benefits of using microservices include improved system performance, easier management of the software architecture, scaling individual services rather than the entire system, and a lower risk that an error in one module affects the others. All this can translate into lower development costs.

5. Asynchronous Processing

Asynchronous processing allows tasks to be handled independently of the main application thread. Offloading heavy operations, such as processing uploaded images, to background workers frees up resources for real-time tasks.
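The core idea is a queue between the request handler and a background worker: the handler enqueues the job and returns right away, and the worker does the slow part later. The Python sketch below uses the standard library’s `queue` and `threading` modules within one process; `make_thumbnail`, `worker`, and `handle_upload` are hypothetical names.

```python
import queue
import threading


def make_thumbnail(image_name: str) -> str:
    # Hypothetical stand-in for slow work such as resizing an uploaded image.
    return f"thumb_{image_name}"


def worker(jobs: queue.Queue, results: list) -> None:
    """Background worker: processes jobs until it receives the None sentinel."""
    while True:
        job = jobs.get()
        try:
            if job is None:
                return
            results.append(make_thumbnail(job))
        finally:
            jobs.task_done()


def handle_upload(jobs: queue.Queue, image_name: str) -> str:
    """Request handler: enqueue the heavy work and respond immediately."""
    jobs.put(image_name)
    return "202 Accepted"


jobs: queue.Queue = queue.Queue()
results: list = []
thread = threading.Thread(target=worker, args=(jobs, results), daemon=True)
thread.start()
print(handle_upload(jobs, "cat.png"))  # returns before the thumbnail is made
jobs.put(None)  # tell the worker to stop once the queue is drained
thread.join()
print(results)  # ['thumb_cat.png']
```

An in-process queue is lost if the application restarts. Across servers, a message broker such as RabbitMQ usually plays the role of the queue, so that jobs survive restarts and workers can be scaled independently of the web servers.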

6. Autoscaling

Autoscaling dynamically adjusts resources based on real-time demand. Both AWS and Google Cloud offer it: AWS Auto Scaling and the autoscaler for managed instance groups in Google Cloud’s Compute Engine automatically scale resources up or down, maintaining performance while helping to optimize the cost of cloud-hosted services.
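Many autoscalers use a target-tracking rule: pick a target value for a metric (for example, 60% average CPU) and resize the fleet in proportion to how far the current value is from that target. The Kubernetes Horizontal Pod Autoscaler documents this proportional rule as desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric). The Python sketch below implements that rule with hypothetical minimum and maximum bounds; it illustrates the idea and is not the exact algorithm used by AWS or Google Cloud.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Proportional target-tracking rule, clamped to [min_replicas, max_replicas]."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    if current_replicas <= 0 or current_metric <= 0:
        return min_replicas
    desired = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, 90, 60))  # 6: CPU above the 60% target, scale out
print(desired_replicas(4, 30, 60))  # 2: CPU below the target, scale in
```

Production autoscalers also add tolerances, cooldown periods, or stabilization windows so that short spikes do not make the fleet flap up and down. The minimum and maximum bounds act as caps on both availability risk and spend.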

7. Open-Source In-Memory Computing

Open-source solutions for in-memory computing, such as Apache Ignite, provide a cost-effective way to scale a system while reducing latency.

Apache Ignite pools the processors and RAM of the available machines into a cluster, distributing data and computations across the individual nodes. Storing data in RAM and taking advantage of massively parallel processing can significantly increase performance.
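The principle of sending computation to where the data lives, rather than moving data to the computation, can be shown with a toy model. The Python sketch below is an illustration only, not the Apache Ignite API: each dictionary stands in for a node holding its partition in RAM, threads stand in for separate machines, and `partition`, `local_sum`, and `cluster_sum` are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor

# Toy model only; this is NOT the Apache Ignite API.


def partition(records, num_nodes):
    """Assign each (key, value) record to a node by hashing its key."""
    nodes = [{} for _ in range(num_nodes)]
    for key, value in records:
        nodes[hash(key) % num_nodes][key] = value
    return nodes


def local_sum(node_data):
    # Runs next to the data: only a small partial result leaves the node.
    return sum(node_data.values())


def cluster_sum(nodes):
    """Send the computation to every node at once, then combine partial results."""
    with ThreadPoolExecutor(max_workers=len(nodes)) as pool:
        return sum(pool.map(local_sum, nodes))


nodes = partition(((order_id, order_id * 10) for order_id in range(1000)), num_nodes=4)
print(cluster_sum(nodes))  # 4995000
```

In a real in-memory data grid the nodes are separate machines, so the partial computations truly run in parallel; here, Python threads only demonstrate the flow of data, not the speedup.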

8. Content Delivery Network (CDN)

CDNs distribute content across many geographically dispersed servers, so users download it from a nearby location. This reduces load times and improves the user experience worldwide.

Providers such as Cloudflare and Akamai cache copies of the site’s content, and users accessing the site are served by a CDN server rather than by our origin server. Because the CDN absorbs most of the requests, the site can handle far higher traffic levels than the origin could alone.
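What a CDN may cache is usually controlled from the origin with standard HTTP `Cache-Control` headers: `max-age` sets a lifetime for all caches, `s-maxage` overrides it for shared caches such as a CDN, and `private`/`no-store` keep personalized responses out of shared caches. The Python sketch below shows one hypothetical policy; the paths, file extensions, and lifetimes are assumptions to adapt, and each CDN provider has its own defaults and override settings.

```python
# Hypothetical policy: long-lived caching for fingerprinted static assets,
# a short CDN lifetime for HTML, and no shared caching for personalized API data.
STATIC_EXTENSIONS = (".css", ".js", ".png", ".jpg", ".svg", ".woff2")


def cache_control_for(path: str) -> str:
    """Return the Cache-Control header value the origin should send for a path."""
    if path.startswith("/api/"):
        return "private, no-store"  # per-user data must not sit in the CDN
    if path.endswith(STATIC_EXTENSIONS):
        return "public, max-age=31536000, immutable"  # one year
    # HTML: browsers revalidate every time; shared caches may keep it for 5 minutes.
    return "public, max-age=0, s-maxage=300"


for path in ("/api/orders", "/static/app.3f2a.js", "/pricing"):
    print(path, "->", cache_control_for(path))
```

The one-year `immutable` lifetime is only safe for fingerprinted asset names (such as `app.3f2a.js`), where a changed file gets a new URL.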

4. Use Automation and Monitoring Tools

Automation tools help implement a scaling strategy by automatically adjusting the resources allocated to an application based on predefined metrics and thresholds.

Popular tools include:

- Performance Monitoring: New Relic or Grafana provide real-time insight into performance issues.

- Message Queuing: RabbitMQ buffers work between components so that bursts of requests are processed at a sustainable pace instead of overwhelming a bottleneck.

- Load Testing: Apache JMeter or LoadRunner simulate heavy traffic to reveal weaknesses.

- Code Profiling: JProfiler for Java or Py-Spy for Python analyze code execution to find inefficiencies.
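Before reaching for JMeter or LoadRunner, a quick concurrency check can already reveal how latency behaves under parallel requests. The Python sketch below is a minimal stand-in, not a replacement for a real load-testing tool: it calls a function concurrently and reports the median, 95th-percentile, and maximum latency. `mini_load_test` and `timed_call` are hypothetical names, and the `time.sleep` call stands in for a real request to a staging environment.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def timed_call(fn) -> float:
    """Run fn once and return its wall-clock duration in seconds."""
    start = time.perf_counter()
    fn()
    return time.perf_counter() - start


def mini_load_test(fn, total_requests: int = 200, concurrency: int = 20) -> dict:
    """Call fn concurrently and report latency percentiles in milliseconds."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(lambda _: timed_call(fn), range(total_requests)))
    cuts = statistics.quantiles(latencies, n=100)  # 99 cut points: cuts[k-1] ~ k-th percentile
    return {
        "p50_ms": cuts[49] * 1000,
        "p95_ms": cuts[94] * 1000,
        "max_ms": max(latencies) * 1000,
    }


report = mini_load_test(lambda: time.sleep(0.005), total_requests=100, concurrency=10)
print(report)
```

Tail percentiles such as p95 matter more than averages for scaling decisions, because they show what the slowest users experience as load grows. Threads work here because the calls are I/O-bound; generating heavy load needs a dedicated tool and, often, several load-generating machines.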

Continuous monitoring and analytics provide insight into application performance and user behavior. Tools such as Splunk or the Grafana stack help you understand and predict traffic patterns, so you can make informed scaling decisions.

5. Implement Cost Optimization Techniques

Cost optimization techniques help reduce or avoid unnecessary or excessive costs associated with scaling cloud applications. For example, you can:

- Choose the right cloud provider and pricing model for your application,

- Leverage cloud-native services and features that offer scalability, performance, and security at a lower cost,

- Apply design principles and best practices that enhance the performance, modularity, and maintainability of applications,

- Adopt a DevOps culture and methodology that enables continuous integration, delivery, and feedback,

- Regularly review and audit cloud accounts and usage reports to take corrective action.
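Part of such an audit can be automated. The Python sketch below scans a usage report for instances whose average CPU stays under a threshold and totals what they cost, producing a shortlist for rightsizing or shutdown. The `InstanceUsage` fields, the 10% threshold, and the sample figures are hypothetical; in practice you would map them from your provider’s billing and monitoring exports.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class InstanceUsage:
    # Hypothetical fields; map them from your provider's usage/billing export.
    instance_id: str
    avg_cpu_percent: float
    monthly_cost: float


def rightsizing_candidates(usage: List[InstanceUsage],
                           cpu_threshold: float = 10.0) -> Tuple[List[InstanceUsage], float]:
    """Return instances under the CPU threshold and the monthly spend they represent."""
    idle = [u for u in usage if u.avg_cpu_percent < cpu_threshold]
    return idle, sum(u.monthly_cost for u in idle)


# Hypothetical sample report.
report = [
    InstanceUsage("i-a", 4.0, 120.0),
    InstanceUsage("i-b", 65.0, 300.0),
    InstanceUsage("i-c", 8.5, 80.0),
]
idle, spend = rightsizing_candidates(report)
print([u.instance_id for u in idle], spend)  # ['i-a', 'i-c'] 200.0
```

Low CPU alone does not prove an instance is unnecessary (it might be memory-bound or a standby replica), so treat the output as candidates for review rather than an automatic shutdown list.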

6. Test and Evaluate Scaling Solutions

Testing and evaluating scaling solutions helps you measure the impact of scaling decisions on application performance, availability, and cost. Conduct different types of tests, such as load testing, stress testing, resiliency testing, or chaos testing, to simulate the various scenarios and conditions that affect application scaling.

Test results and feedback should also be collected, analyzed, and used to improve scaling solutions.

Scale with the Future in Mind

We hope the above tips will help you manage your application development, whether you are currently coping with a surge in demand or planning for such increases.

It is worth remembering that scalability is critical from a business perspective. As you work to meet your application development needs, think now about how to sustain growth over the long term. If you need help with solution architecture, consult our engineers. We will be happy to help you plan and execute your application scaling strategy.