Why Flexibility in AI Implementation Is Critical for Businesses

Artificial intelligence is becoming the foundation of digital transformation for enterprises. Its role keeps expanding, from office process automation and data analysis to support for strategic decision-making. But as capabilities grow, so do expectations around security, regulatory compliance, and cost efficiency.

65% of companies cite data security as the biggest barrier to broader AI adoption (McKinsey, 2024). Meanwhile, Gartner forecasts that by 2026, 75% of large organizations will deploy hybrid AI models, combining public cloud, private environments, and RAG architectures.

That’s why deployment flexibility is becoming a critical factor—different industries require different approaches, and there is no one-size-fits-all solution.

Top Challenges in Implementing AI in Business

Before launching an AI project, many companies face obstacles that block effective use of the technology. The most common concerns are data security and compliance, a lack of in-house MLOps expertise, and uncertainty around long-term costs. Large organizations also struggle to integrate fragmented systems and knowledge bases, which directly affects the performance of language models.

Integrating AI in Business – Architecture, APIs, and Security

Integrating AI into existing business processes requires a well-thought-out architecture. Cloud-based solutions (e.g., Copilot, ChatGPT) typically integrate through ready-made plugins and APIs, which shortens implementation time. Private LLMs also provide API access, but the organization must maintain the server infrastructure for the model itself. Beyond that, the integration components (data management, authentication, business logic, and user interfaces) are similar for cloud and privately hosted LLMs.

For RAG (retrieval-augmented generation), the cornerstone is implementing an advanced OCR system, a vector database, and a content pipeline that enables real-time updates. Only well-designed integration ensures that AI functions not as an “add-on” but as a seamless part of the IT ecosystem.
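As a rough illustration of the content pipeline mentioned above, the sketch below splits a document into overlapping chunks and keeps them in an index keyed by document ID, so that re-ingesting a document replaces its stale chunks. It is a minimal sketch under stated assumptions: the text is assumed to be already extracted (the OCR step is omitted), `chunk` and `ChunkIndex` are hypothetical names, and the in-memory dictionary only stands in for a real vector database.

```python
def chunk(text: str, size: int = 40, overlap: int = 10) -> list[str]:
    """Split text into windows of `size` words that overlap by `overlap` words."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    # Stop once the remaining words are already covered by the previous window.
    last_start = max(len(words) - overlap, 1)
    return [" ".join(words[i:i + size]) for i in range(0, last_start, step)]


class ChunkIndex:
    """Hypothetical in-memory stand-in for a vector database, keyed by document ID."""

    def __init__(self) -> None:
        self._chunks: dict[str, list[str]] = {}

    def upsert(self, doc_id: str, text: str) -> None:
        # Replacing the whole entry drops chunks left over from the old version.
        self._chunks[doc_id] = chunk(text)

    def delete(self, doc_id: str) -> None:
        self._chunks.pop(doc_id, None)

    def all_chunks(self) -> list[tuple[str, str]]:
        return [(doc_id, c) for doc_id, parts in self._chunks.items() for c in parts]
```

Keying chunks by document ID is one simple way to support updates: when a source document changes, a single `upsert` call swaps its old fragments for the new ones.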

Copilot and ChatGPT – How to Quickly Deploy Cloud-Based AI in Your Business

Solutions like Microsoft Copilot or ChatGPT allow organizations to start using AI within just a few days, without investing in infrastructure. Access to models such as GPT-4o and GPT-5 ensures top-tier content quality, while the integration ecosystem makes AI a natural extension of everyday business tools.

At the same time, it’s important to remember that organizations using cloud services are fully dependent on the provider—both for data storage and pricing policies. In regulated industries, the risks associated with processing data in the cloud often prevent organizations from fully leveraging these solutions.

Private LLMs – Full Control and Regulatory Compliance

Privately hosted LLMs (e.g., LLaMA, Mistral, Gemini, Falcon, or the Polish Bielik) meet the needs of organizations requiring full control over data processing. Deployed locally (on-premise) or in private clouds, they allow for architecture customization to meet industry and legal requirements.

Their greatest advantage is that they make regulatory compliance (GDPR, HIPAA) easier to achieve, because data is processed only on infrastructure the organization controls. They can also be fine-tuned for specific business processes. This is the preferred choice of banks, public institutions, and the healthcare sector, where data protection and compliance are top priorities.

RAG – Intelligent Connection Between Models and Company Knowledge

An increasingly popular approach is RAG (Retrieval-Augmented Generation). In this architecture, the LLM functions as the language layer, while responses are generated based on the company’s internal data.

Here’s how it works: a user query triggers a search in a vector database or index (e.g., Pinecone, Weaviate, FAISS), and the relevant document fragments are dynamically injected into the model’s context. Grounding answers in current company data improves accuracy and timeliness, and the LLM can be swapped without rebuilding the entire architecture from scratch.
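The retrieval step described above can be sketched in a few lines of Python. This is a toy illustration, not a production setup: the bag-of-words `embed` function stands in for a real embedding model, the list scan stands in for a vector database query, and all names (`embed`, `retrieve`, `build_prompt`, `FRAGMENTS`) are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, fragments: list[str], k: int = 2) -> list[str]:
    """Return the k fragments most similar to the query."""
    q = embed(query)
    return sorted(fragments, key=lambda f: cosine(q, embed(f)), reverse=True)[:k]


def build_prompt(query: str, fragments: list[str], k: int = 2) -> str:
    """Inject the retrieved fragments into the prompt sent to the LLM."""
    context = "\n".join(f"- {f}" for f in retrieve(query, fragments, k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


FRAGMENTS = [
    "Invoices must be approved by the finance team within 14 days.",
    "Vacation requests are submitted through the HR portal.",
    "The VPN is required for remote access to internal systems.",
]

print(build_prompt("How are vacation requests submitted?", FRAGMENTS, k=1))
```

Because the model only ever sees the assembled prompt, the same retrieval code works regardless of which LLM produces the final answer.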

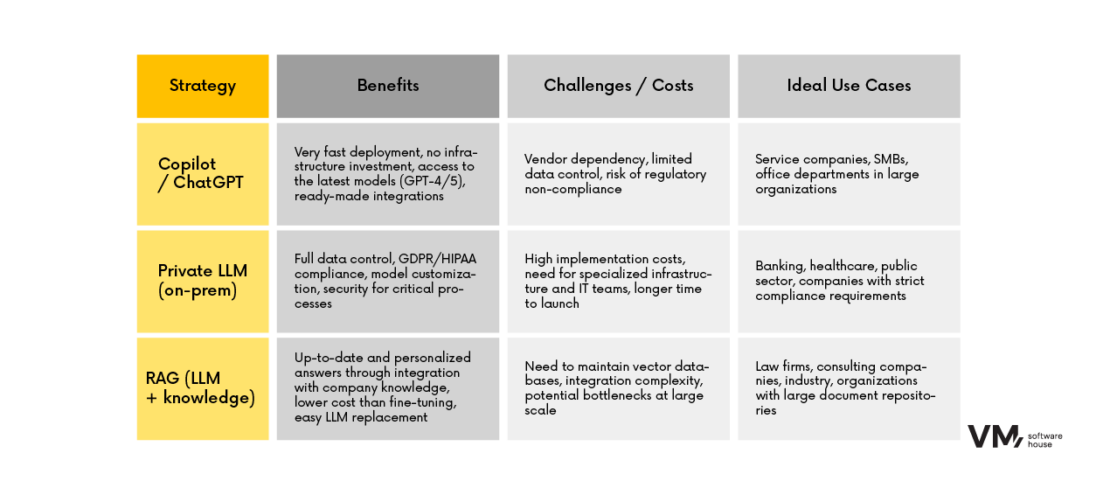

Comparing AI Deployment Strategies – Copilot, LLMs, and RAG

Ready-to-Use AI Solutions for Business – From Copilot to RAG and LLMs

As an AI solutions provider, we deliver the full spectrum of deployment options. We implement Copilot and ChatGPT Enterprise to help companies quickly adopt AI. We also build private LLM installations running both on private cloud and on-premise environments for organizations with strict regulatory requirements.

Our specialty lies in RAG systems with interchangeable LLM layers, ensuring flexibility and full control over data. We use modular architectures that allow easy model replacement, guarantee security and compliance, and optimize costs by aligning technology with business needs.
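One way to picture an interchangeable LLM layer is a small interface that every model backend implements. The sketch below is an assumption about how such a design could look, not a description of our actual codebase: `LanguageModel`, `EchoModel`, `ShoutModel`, and `RagPipeline` are hypothetical names, and the two model classes are trivial stand-ins for real backends such as a cloud API client or a locally hosted model.

```python
from typing import Callable, Protocol


class LanguageModel(Protocol):
    """Anything with a generate() method can serve as the LLM layer."""

    def generate(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in backend that returns the prompt with a marker."""

    def generate(self, prompt: str) -> str:
        return f"[echo] {prompt}"


class ShoutModel:
    """Second stand-in backend, used to show a swap."""

    def generate(self, prompt: str) -> str:
        return prompt.upper()


class RagPipeline:
    def __init__(self, model: LanguageModel, retriever: Callable[[str], list[str]]) -> None:
        self.model = model
        self.retriever = retriever

    def answer(self, question: str) -> str:
        # Retrieval and prompt assembly do not depend on which model is plugged in.
        context = "\n".join(self.retriever(question))
        return self.model.generate(f"Context:\n{context}\n\nQuestion: {question}")


pipeline = RagPipeline(EchoModel(), lambda q: ["Contracts are archived for 5 years."])
first = pipeline.answer("How long are contracts archived?")
pipeline.model = ShoutModel()  # swap the LLM layer; the rest stays unchanged
second = pipeline.answer("How long are contracts archived?")
```

Swapping the model is a one-line change here because the pipeline depends only on the `generate` interface, not on any specific provider.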

Example: In the legal sector, we developed a RAG system based on the Mistral model and the client’s private knowledge base. This reduced the time required for document analysis by 35% while maintaining full compliance with data security policies.

An additional advantage of our modular system is the flexibility of the UI layer: it can be a website, a Teams app, a voice assistant, or an MS Copilot integration.

How to Choose the Optimal AI Deployment Path

Selecting the right AI strategy should be preceded by an analysis of key factors. Organizations must consider the nature of their data, regulatory requirements, available budget, and business priorities. Companies seeking quick results can benefit from ready-to-use cloud services. Where compliance and full control are critical, private models are the better choice. Enterprises aiming to combine the power of language models with their own documentation should consider a RAG architecture.
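The selection logic above can be condensed into a simple rule of thumb. The helper below is a deliberately simplified sketch, assuming only two yes/no inputs and treating RAG as independent of the hosting choice. A real assessment would also weigh budget, data sensitivity, and business priorities, and the names `Recommendation` and `recommend` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Recommendation:
    hosting: str   # "cloud service" or "private LLM"
    use_rag: bool  # whether to add a retrieval layer over company documents


def recommend(needs_full_control: bool, uses_own_documents: bool) -> Recommendation:
    """Map two coarse requirements to a deployment path (simplified assumption)."""
    hosting = "private LLM" if needs_full_control else "cloud service"
    return Recommendation(hosting=hosting, use_rag=uses_own_documents)


# A regulated organization that wants answers grounded in its own documentation:
print(recommend(needs_full_control=True, uses_own_documents=True))
```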

Why Flexibility in AI Deployment (Copilot, ChatGPT, LLM, RAG) Matters

Deployment flexibility is the foundation of effective AI transformation. There is no universal path—different organizations require different approaches. Cloud solutions provide speed, private LLMs ensure control, and RAG systems deliver the most practical link between up-to-date data and language model capabilities.

RAG is becoming the industry standard, but every organization needs a tailored approach. That’s why we support companies in designing and implementing AI architectures adapted to their needs, from proof of concept through integration to full production environments.

Contact us to plan a flexible AI deployment—whether it’s a fast start with Copilot, private LLMs, or RAG systems tailored to your business.