Companies with large product portfolios face growing challenges in delivering fast, consistent, and cost-efficient customer support. By implementing large language models (LLMs) like ChatGPT, it’s possible to reduce response times by up to 80%, automate ticket resolution, and cut operational costs by as much as 70%. In this article, we explain how to integrate LLMs into your support team’s workflow and CRM systems to build a modern, AI-powered self-service solution.


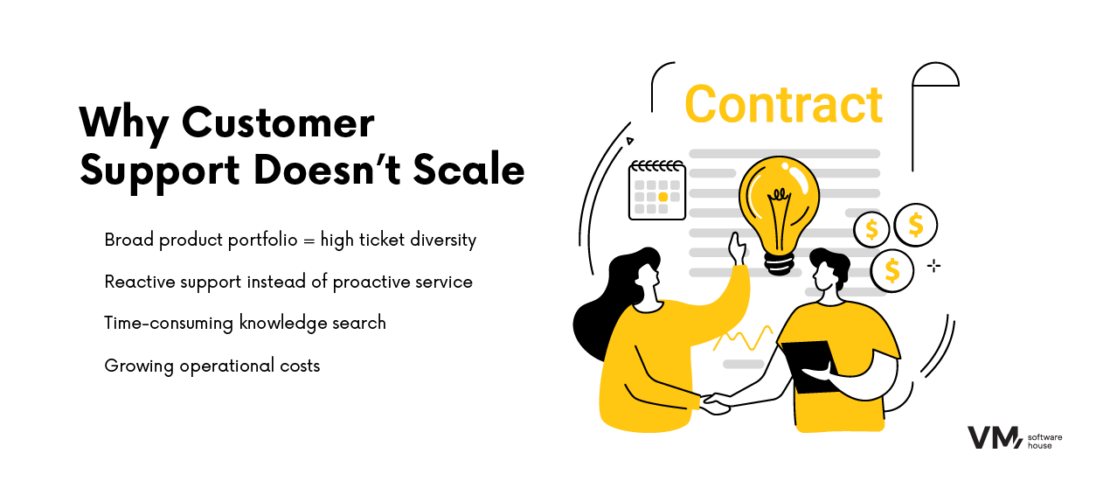

Key Challenges in Customer Support for Companies with Large Product Portfolios

Companies offering a wide range of products have long struggled to provide effective customer service. Each product line comes with its own set of issues, forcing support teams to handle a huge variety of tickets. In the traditional model, support is reactive: agents respond only once a customer submits a request, and finding the right solution often requires tedious searches through documentation or consulting more experienced colleagues. This approach generates high operational costs, leads to long resolution times, and lowers customer satisfaction.

The solution lies in leveraging large language models (LLMs). When implemented correctly, an LLM can act as a knowledge assistant, drawing on the last five years of historical tickets and technical documentation to suggest the best solutions to agents—and later enabling customers to find answers on their own.

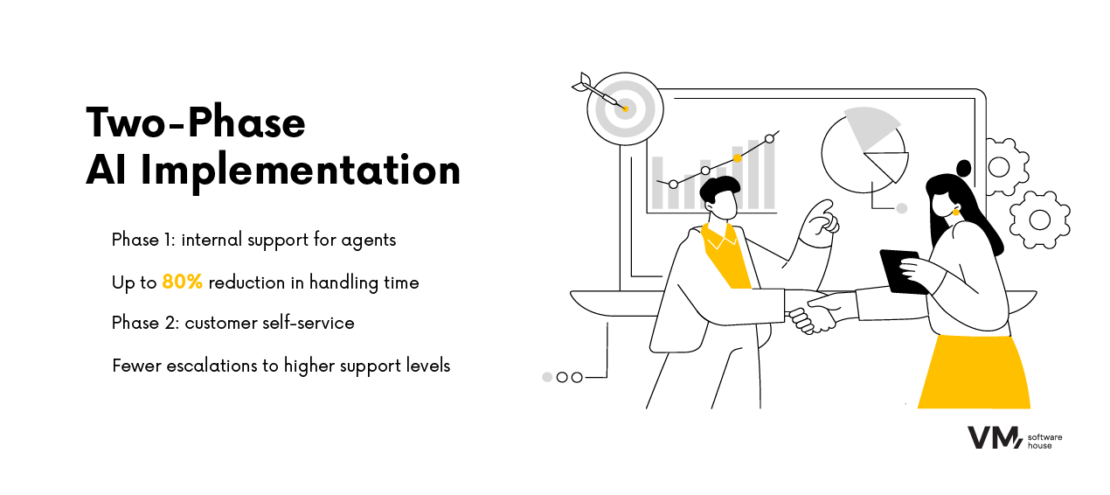

The deployment process consists of two phases. In the first phase, the LLM primarily supports customer service agents, helping them quickly locate solutions and reducing the number of escalations to higher levels. In the second phase, the same system is made available to customers as a self-service tool, allowing them to resolve issues without contacting support. The result: significant improvements in customer experience and a reduction in operating costs.

How Large Language Models (LLMs) Are Transforming Customer Service

Phase One: Internal Support

In the first phase, the language model operates in the background as an internal tool. Agents can use it while handling tickets, dramatically reducing response times. Instead of searching across multiple knowledge sources, the agent simply asks the LLM a question and receives a suggestion within seconds—based on both documentation and insights stored in historical tickets.

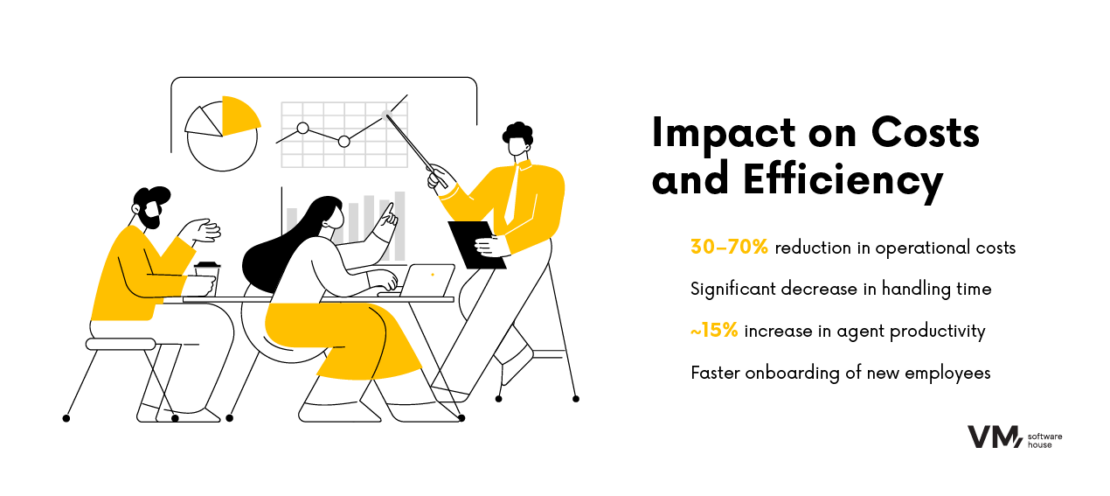

This approach can reduce handling time by up to 80% and cut operating costs by 30–70%. Equally important, it ensures consistency: every agent relies on the same knowledge base, minimizing discrepancies in customer communications.

Studies show that generative AI increases agent productivity by an average of about 15%, with the largest gains among less experienced agents. As a result, new employees ramp up faster, while experienced staff can focus more on solving complex, non-standard cases.

Phase Two: External Support

The second phase is a natural extension of the first. Once the LLM has been validated internally and earned the trust of the support team, it can be made available to customers. In this setup, the model functions as a self-service system via a chatbot or help portal. Customers ask questions and instantly receive answers based on documentation and past ticket resolutions.

This approach resolves a significant portion of issues without agent involvement, reducing the workload for support teams and freeing them to focus on more complex matters.

The Technical Side of AI in Customer Service – How It Works in Practice

An LLM functions as an intelligent knowledge assistant. Once the data is properly prepared, the system interprets the context of a query, connects related facts, and proposes ready-to-use solutions in real time. A single question is enough for the system to retrieve relevant cases from the ticket archive and documentation and use them to generate a precise answer. Every resolved case can be added back to the knowledge base, so response quality improves as more cases are processed.
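The retrieval step described above is commonly implemented as retrieval-augmented generation (RAG): the system finds the most relevant snippets, then passes them to the model together with the question. The sketch below is a simplified illustration, not a production pipeline. It assumes a tiny in-memory corpus and uses bag-of-words cosine similarity as a stand-in for the vector embeddings a real system would typically use. The `Snippet` type, the sample texts, and the prompt wording are all hypothetical, and the actual call to an LLM is left out.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Snippet:
    source: str  # hypothetical label, e.g. "ticket" or "docs"
    text: str


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words; a stand-in for real embeddings."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, corpus: list[Snippet], k: int = 2) -> list[Snippet]:
    """Return the k snippets most similar to the query."""
    q = tokenize(query)
    ranked = sorted(corpus, key=lambda s: cosine(q, tokenize(s.text)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[Snippet]) -> str:
    """Assemble a grounded prompt; sending it to an LLM is out of scope here."""
    lines = [f"[{s.source}] {s.text}" for s in context]
    return (
        "Answer using only the context below. If it is not sufficient, say so.\n\n"
        + "\n".join(lines)
        + f"\n\nQuestion: {query}"
    )


# Hypothetical knowledge sources: one past ticket and two documentation entries.
corpus = [
    Snippet("ticket", "Printer shows error E42 after firmware update; rolling back firmware fixed it."),
    Snippet("docs", "Error E42 indicates a firmware mismatch between printer and driver."),
    Snippet("docs", "To reset the router, hold the reset button for ten seconds."),
]

question = "How do I fix printer error E42?"
top = retrieve(question, corpus)
prompt = build_prompt(question, top)
print(prompt)
```

Instructing the model to answer only from the supplied context, and to say when that context is insufficient, is a common way to reduce answers that are not backed by documentation or past tickets.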

A key condition for effectiveness is integration with existing tools. The system can connect with CRM platforms, ticketing software, knowledge bases, or internal communication tools so that agents receive answers directly within their daily work environment. For external deployments, the model integrates with customer portals and website chatbots, ensuring end users gain direct access to knowledge without changing their habits.
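As an illustration of the phase-one integration, the sketch below shows what a handler for a "ticket created" event might look like. Everything in it is hypothetical: the `Ticket` and `InternalNote` types, the handler name, and `fake_suggest`, which stands in for the retrieval and LLM step. Real CRM and ticketing platforms each have their own webhook and API formats, so posting the note back to the platform is not shown.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Ticket:
    id: str
    subject: str
    body: str


@dataclass
class InternalNote:
    ticket_id: str
    text: str
    visible_to_customer: bool = False  # phase one: suggestions are for agents only


def on_ticket_created(ticket: Ticket, suggest: Callable[[str], str]) -> InternalNote:
    """Hypothetical webhook handler: draft a suggestion and attach it as an internal note."""
    question = f"{ticket.subject}\n{ticket.body}"
    suggestion = suggest(question)
    return InternalNote(
        ticket_id=ticket.id,
        text=f"AI suggestion (review before sending):\n{suggestion}",
    )


def fake_suggest(question: str) -> str:
    # Stand-in for the retrieval + LLM pipeline.
    return "Check the firmware version and roll back if it is newer than the driver."


note = on_ticket_created(Ticket("T-1001", "Error E42", "Printer stopped after update."), fake_suggest)
print(note)
```

Keeping the suggestion as an internal note rather than a customer-visible reply fits the first phase, in which agents review every AI answer before it reaches a customer. Passing `suggest` in as a function also makes it easy to swap the stand-in for the real pipeline later.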

The Impact of AI and LLMs on Customer Support Costs, Quality, and Response Time

From a business perspective, implementing AI in customer support delivers measurable results. Operating costs can drop by up to 70%, and ticket handling times can be cut substantially, by as much as 80% in some cases. These figures are reported both in global industry studies and in companies’ own deployments.
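To see how such percentages translate into a payback period, here is a rough calculation. All inputs are hypothetical: an annual support budget of 1,000,000, a 30% cost reduction (the low end of the 30–70% range), a one-off implementation cost of 200,000, and 50,000 per year to run the system. Currency is left unspecified.

```python
def payback_months(annual_support_cost: float, cost_reduction: float,
                   implementation_cost: float, annual_running_cost: float) -> float:
    """Months until cumulative net savings cover the one-off implementation cost."""
    net_annual_savings = annual_support_cost * cost_reduction - annual_running_cost
    if net_annual_savings <= 0:
        return float("inf")  # the system never pays for itself under these inputs
    return implementation_cost * 12 / net_annual_savings


# Hypothetical inputs, not data from a real deployment.
months = payback_months(
    annual_support_cost=1_000_000,
    cost_reduction=0.30,
    implementation_cost=200_000,
    annual_running_cost=50_000,
)
print(f"Payback period: {months:.1f} months")
```

With these inputs, the net saving is 250,000 per year and the payback period is 9.6 months. The model ignores revenue effects from higher satisfaction, so it errs on the conservative side.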

Financial savings are only part of the story. Improving service quality is equally important. Customers who receive fast, accurate answers are more likely to stay loyal and recommend the brand to others. Higher customer satisfaction, in turn, supports revenue, helping the AI investment pay off faster.

Implementing AI in Customer Support: The 4D Framework (Discovery, Definition, Delivery, Direction)

To ensure effectiveness, implementation should follow a proven process. Our 4D method covers four key stages:

- Discovery: We analyze organizational needs and identify customer service pain points. Data is collected from multiple sources—historical tickets, technical documentation, reports, and satisfaction surveys. This helps us map recurring issues and highlight areas where AI can bring the greatest benefits.

- Definition: We determine which processes the model should support first. Priorities are set along with success metrics such as average handling time, first contact resolution (FCR), or customer satisfaction indicators like NPS and CSAT. This ensures clear goals and measurable outcomes from the start.

- Delivery: The LLM assistant is built and integrated with the ticketing system and knowledge base. The model undergoes internal testing and is then launched externally at a limited scale. In parallel, quality tests and monitoring ensure the AI provides precise and valuable answers.

- Direction: The final stage defines the path for further development. The system is continuously improved, with its effectiveness compared against pre-implementation operational costs. ROI indicators are evaluated, and additional features are considered to further boost value. This stage also includes designing a hybrid model, combining AI with human work to ensure both security and the highest quality of service.
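The Definition and Direction stages both depend on measuring the same metrics before and after deployment. The sketch below computes four of the metrics named above from closed-ticket records using common definitions: AHT as the mean handling time, FCR as the share of tickets resolved in a single contact, CSAT as the share of 4 or 5 ratings on a 5-point survey, and NPS as the percentage of promoters (9 to 10) minus the percentage of detractors (0 to 6). The record layout and sample data are hypothetical, and teams often define CSAT and FCR slightly differently.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ClosedTicket:
    handle_minutes: float
    contacts_to_resolve: int
    csat: int  # 1-5 survey score
    nps: int   # 0-10 "how likely are you to recommend us" score


def kpis(tickets: list[ClosedTicket]) -> dict[str, float]:
    """Compute AHT, FCR, CSAT, and NPS for a batch of closed tickets."""
    if not tickets:
        raise ValueError("need at least one ticket")
    n = len(tickets)
    promoters = sum(t.nps >= 9 for t in tickets)
    detractors = sum(t.nps <= 6 for t in tickets)
    return {
        "aht_minutes": mean(t.handle_minutes for t in tickets),
        "fcr": sum(t.contacts_to_resolve == 1 for t in tickets) / n,
        "csat": sum(t.csat >= 4 for t in tickets) / n,
        "nps": 100 * (promoters - detractors) / n,
    }


def compare(baseline: dict[str, float], current: dict[str, float]) -> dict[str, float]:
    """Absolute change for each metric (current minus baseline)."""
    return {k: current[k] - baseline[k] for k in baseline}


# Hypothetical samples: before deployment and during a limited pilot.
baseline = [ClosedTicket(20.0, 1, 4, 9), ClosedTicket(30.0, 2, 3, 6),
            ClosedTicket(25.0, 1, 5, 8), ClosedTicket(25.0, 3, 2, 3)]
pilot = [ClosedTicket(8.0, 1, 5, 10), ClosedTicket(12.0, 1, 4, 9),
         ClosedTicket(10.0, 2, 4, 7), ClosedTicket(10.0, 1, 3, 6)]

for name, change in compare(kpis(baseline), kpis(pilot)).items():
    print(f"{name}: {change:+.2f}")
```

In practice, the baseline would come from a representative pre-implementation period, and the comparison would be repeated at each review point in the Direction stage.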

Real-World Applications of LLMs in Customer Service and E-Commerce

The use of LLMs in customer support goes far beyond answering technical questions. The system can assist with user onboarding by providing information on configuration and first steps with the product. In technical support, it helps resolve recurring configuration errors, significantly reducing the workload for agents. In retail and e-commerce, it can serve as a product advisor, offering recommendations based on customer needs. In B2B services, it supports the analysis of complex contracts and implementation documentation. This broad range of applications makes LLMs an investment with both fast ROI and long-term value.

Implementing LLMs in customer service benefits more than just the support team. The data processed by the system is a valuable source of insights for other departments. The product team gains visibility into the most common issues, enabling faster improvements. Sales gains a better understanding of customer expectations, helping tailor offerings to real needs. Marketing can leverage knowledge about frequently asked questions to create more targeted educational and promotional materials. As a result, customer support evolves from a contact point into a strategic knowledge hub for the entire organization.
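A simple way to surface these insights is to count resolved tickets by product and issue category. The sketch assumes each ticket has already been labeled with a product and a category, for example by the LLM itself or by agents. The field names, product names, and sample data are hypothetical.

```python
from collections import Counter

# Hypothetical resolved tickets, already labeled with product and category.
tickets = [
    {"product": "Printer X200", "category": "firmware"},
    {"product": "Router R1", "category": "wifi setup"},
    {"product": "Printer X200", "category": "firmware"},
    {"product": "Printer X200", "category": "paper jam"},
    {"product": "Router R1", "category": "wifi setup"},
    {"product": "Printer X200", "category": "firmware"},
]


def top_issues(tickets: list[dict[str, str]], n: int = 3) -> list[tuple[tuple[str, str], int]]:
    """Most frequent (product, category) pairs, highest count first."""
    return Counter((t["product"], t["category"]) for t in tickets).most_common(n)


for (product, category), count in top_issues(tickets, n=2):
    print(f"{product} / {category}: {count} tickets")
```

The same counts can feed a product team’s backlog or a marketing FAQ, since they show where customers most often get stuck.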

Top AI Implementation Challenges and Practical Recommendations

Deploying LLMs in customer support comes with several challenges. The most important are trust and transparency: customers must know whether they are interacting with AI or with a human agent. Another challenge is answer quality: the system must be factually accurate while also taking the user’s context and emotions into account. A hybrid model is essential, in which AI resolves most repetitive issues and human agents remain available for more complex cases.
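The hybrid model and the transparency requirement can be combined in a single routing rule. The sketch below is a hypothetical policy, not a recommended configuration. It escalates to a human when the customer asks for one, when the message contains frustration keywords, or when the draft answer’s confidence falls below a threshold. Otherwise, it sends the AI answer with an explicit disclosure. LLMs do not produce a calibrated confidence score on their own, so `confidence` is assumed to come from a separate step such as retrieval similarity. The keyword sets, the disclosure text, and the 0.75 threshold are placeholders.

```python
import re
from dataclasses import dataclass

AI_DISCLOSURE = "You are chatting with an AI assistant. Type 'agent' to reach a person."
HANDOFF_WORDS = {"agent", "human", "person"}  # hypothetical trigger words
FRUSTRATION_WORDS = {"angry", "frustrated", "unacceptable"}  # crude stand-in for sentiment analysis


@dataclass
class Draft:
    answer: str
    confidence: float  # 0.0-1.0, assumed to come from a separate scoring step


def route(draft: Draft, customer_message: str, threshold: float = 0.75) -> tuple[str, str]:
    """Return (handler, reply): 'human' for escalations, 'ai' for disclosed AI answers."""
    # Whole-word matching, so "management" does not trigger "agent".
    words = set(re.findall(r"[a-z]+", customer_message.lower()))
    if words & HANDOFF_WORDS or words & FRUSTRATION_WORDS or draft.confidence < threshold:
        return "human", "Connecting you with a support specialist."
    return "ai", f"{AI_DISCLOSURE}\n\n{draft.answer}"


print(route(Draft("Roll back the firmware to fix E42.", 0.9), "How do I fix E42?"))
print(route(Draft("Roll back the firmware to fix E42.", 0.9), "This is unacceptable"))
```

A keyword list is only a rough proxy for emotion. A production system would more likely use a dedicated sentiment or intent classifier, but the routing structure stays the same.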

Employees must not be overlooked. It’s crucial that they view AI as support, not a threat. Involving teams in training and improving the model increases adoption and leads to better results.

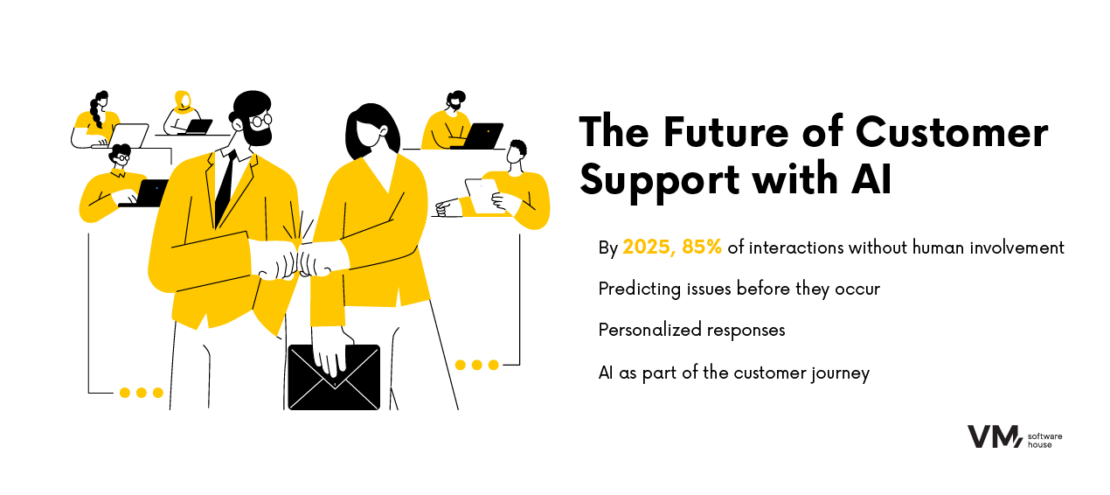

The Future of AI in Customer Support: Prediction, Personalization, and Journey Automation

The evolution of AI in customer service is just beginning. Current deployments focus on faster responses and cost reduction, but in the coming years, more advanced features will emerge. Language models will not only answer questions but also predict problems before they arise, suggest personalized product recommendations, and even support customers in real time while using services or software. AI will become an integral part of the customer journey, and companies that invest today will gain an advantage difficult for competitors to match.

If you’d like to explore how to leverage AI and our 4D method in your organization, contact us—we’ll design the customer service system of the future together.